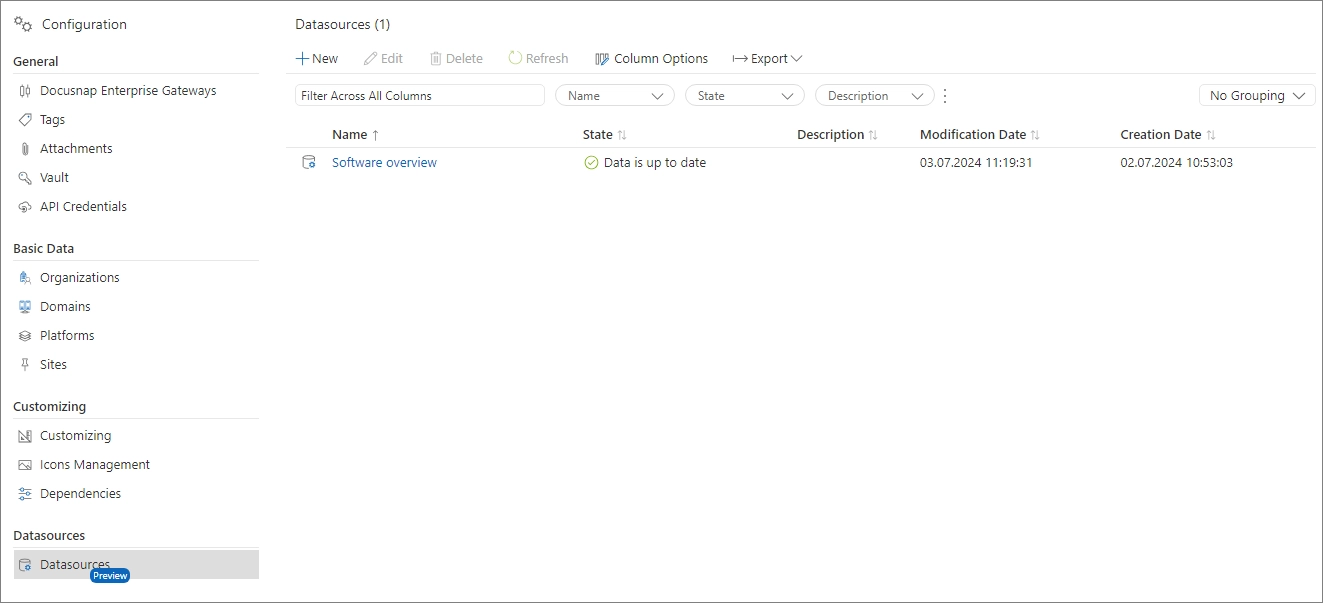

Data Sources


Introduction

In today’s data-driven world, effectively managing and analyzing large volumes of data is essential for gaining valuable insights and making informed decisions. Docusnap365 addresses this need with “Data Sources,” which serve as central definitions for extracting, aggregating, and merging data from various sources. This guide explains how to create these definitions in Docusnap365 and how they can be used as a foundation for reports, the REST API, or comprehensive analyses. At present, however, data sources can be used only in reports and through the REST API.

When creating data sources, “Presentations” are used to visualize different object types. A presentation defines what information will be displayed, including headings, categories, labels, and data. For example, when a Windows system is opened in the inventory area, a menu with all of its categories is displayed. If the “Software” category is selected, a list of software products with their specific properties appears. Presentations make it easier to create data sources because they show only the structure, not the data itself, so the required information can be selected intuitively.
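To make the idea of "structure without data" more concrete, the following is a minimal sketch of how a presentation could be modeled. It is not Docusnap365's internal representation: the class names `Presentation` and `Category`, the `select` method, and the property names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    """A category of an object type, e.g. "Software" (hypothetical model)."""
    heading: str
    properties: list[str]  # property labels only, no inventory values


@dataclass
class Presentation:
    """Describes the structure of an object type without holding any data."""
    object_type: str
    categories: list[Category] = field(default_factory=list)

    def select(self, category_heading: str, wanted: list[str]) -> dict:
        """Build a simple data source definition from selected properties."""
        for category in self.categories:
            if category.heading == category_heading:
                missing = [p for p in wanted if p not in category.properties]
                if missing:
                    raise KeyError(f"Unknown properties: {missing}")
                return {
                    "object_type": self.object_type,
                    "category": category_heading,
                    "properties": list(wanted),
                }
        raise KeyError(f"Unknown category: {category_heading}")


# Illustrative presentation for a Windows system (property names are assumed).
windows = Presentation(
    object_type="Windows system",
    categories=[
        Category("Software", ["Name", "Version", "Publisher"]),
        Category("Processor", ["Name", "Max Clock Speed"]),
    ],
)

definition = windows.select("Software", ["Name", "Version"])
```

The resulting `definition` only names what should be extracted; no inventory values are involved at this stage.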

After defining a data source, relevant data is extracted from the original data and stored in separate tables. This process reduces the amount of data needed for analysis by isolating the necessary information, significantly improving performance and reducing resource consumption for report creation and queries over the REST API.

Definition of Terms

The terms Extract, Transform, and Store are central to the creation of data sources.

Extract: Based on the data source definition, selected information is extracted from the original data. For example, specific processor information is extracted from a Windows computer if selected.

Transform: The extracted information is then processed so that it is suitable for further use. For example, the maximum clock speed of a processor is stored in hertz and additionally provided as a human-readable property, such as 3.5 GHz.

Store: After extraction and transformation are complete, the data is stored in tables for further use in reports or the REST API. This enables resource-efficient, high-performance access to the data.
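The three steps above can be sketched in a few lines of Python. This is only an illustration of the Extract, Transform, Store idea under stated assumptions, not how Docusnap365 implements it: the record layout, the field names, the table name `processor_source`, and the use of an in-memory SQLite database are all hypothetical.

```python
import sqlite3

# Hypothetical original inventory data; field names are illustrative only.
raw_inventory = [
    {"host": "PC-01", "os": "Windows 11", "cpu_name": "Example CPU A",
     "cpu_max_clock_hz": 3_500_000_000},
    {"host": "PC-02", "os": "Windows 10", "cpu_name": "Example CPU B",
     "cpu_max_clock_hz": 2_400_000_000},
]

# The "data source definition": only these fields are of interest.
SELECTED_FIELDS = ("host", "cpu_name", "cpu_max_clock_hz")


def extract(records, fields):
    """Extract: keep only the fields named in the definition."""
    return [{name: record[name] for name in fields} for record in records]


def format_clock(hz):
    """Render a clock speed given in hertz as a readable GHz string."""
    return f"{hz / 1_000_000_000:.1f} GHz"


def transform(rows):
    """Transform: keep the raw hertz value and add a readable property."""
    return [
        {**row, "cpu_max_clock_readable": format_clock(row["cpu_max_clock_hz"])}
        for row in rows
    ]


def store(rows, conn):
    """Store: write the prepared rows to a separate table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS processor_source ("
        "host TEXT, cpu_name TEXT, "
        "cpu_max_clock_hz INTEGER, cpu_max_clock_readable TEXT)"
    )
    conn.executemany(
        "INSERT INTO processor_source VALUES "
        "(:host, :cpu_name, :cpu_max_clock_hz, :cpu_max_clock_readable)",
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(transform(extract(raw_inventory, SELECTED_FIELDS)), conn)

# A report or API query now reads the small, prepared table.
result = conn.execute(
    "SELECT host, cpu_max_clock_readable FROM processor_source ORDER BY host"
).fetchall()
```

Queries then run against the small prepared table rather than the full inventory records, which is the source of the performance benefit described earlier.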